Bias-Safe AI is becoming a foundational requirement for banks as artificial intelligence increasingly influences credit decisions, fraud detection, customer engagement, and risk management. Beyond improving efficiency, banks must ensure that their AI systems operate fairly, transparently, and in line with regulatory and ethical expectations, especially where biased outcomes can directly affect customers' access to financial services and their trust in the bank. The sections below outline how banks can operationalize responsible AI by embedding bias mitigation directly into their AI strategy, governance, and delivery lifecycle.


1. Bias-Safe AI as a Core Pillar of Responsible Banking Innovation

Bias-Safe AI represents a strategic commitment by banks to ensure that artificial intelligence systems deliver value without reinforcing historical inequalities or introducing new forms of discrimination. As AI increasingly shapes lending, fraud detection, and customer decisioning, banks must embed fairness, transparency, and accountability into every stage of the AI lifecycle, from data selection and model training to deployment and post-implementation monitoring. Treating bias mitigation as a foundational design principle, rather than a compliance afterthought, enables banks to build durable trust with customers, regulators, and internal stakeholders while scaling Bias-Safe AI-driven innovation responsibly.

2. What is Built-in Responsible AI?

Responsible AI is a framework for ensuring that the decisions made by AI and machine learning models are fair, transparent, and respectful of individuals' privacy. Its emphasis on explainability, reliability, and human-in-the-loop (HITL) review gives financial institutions safeguards against AI risks. Built-in Responsible AI goes a step further: it gives banks a straightforward way to establish equitable AI and machine learning rules and processes without sacrificing system functionality. It offers banks options that support more equitable decision-making, but banks remain free to choose the approach that best suits their needs and are not required to adopt any particular option.

Bias can appear at various phases of model construction or training. A model that continues to learn in production may also acquire biases its creators did not anticipate. Beyond the models themselves, bias can seep into a bank's internal policies and into the judgments of the people who make decisions affecting customers' financial security. As a result, banks may deny qualified people crucial financial services, such as bank accounts, credit cards, bill payment, or loan approval. These customers are unjustly excluded because a machine learning algorithm determined that they live in a "high-risk" neighborhood or are the "wrong" gender, even though no one intended that outcome.

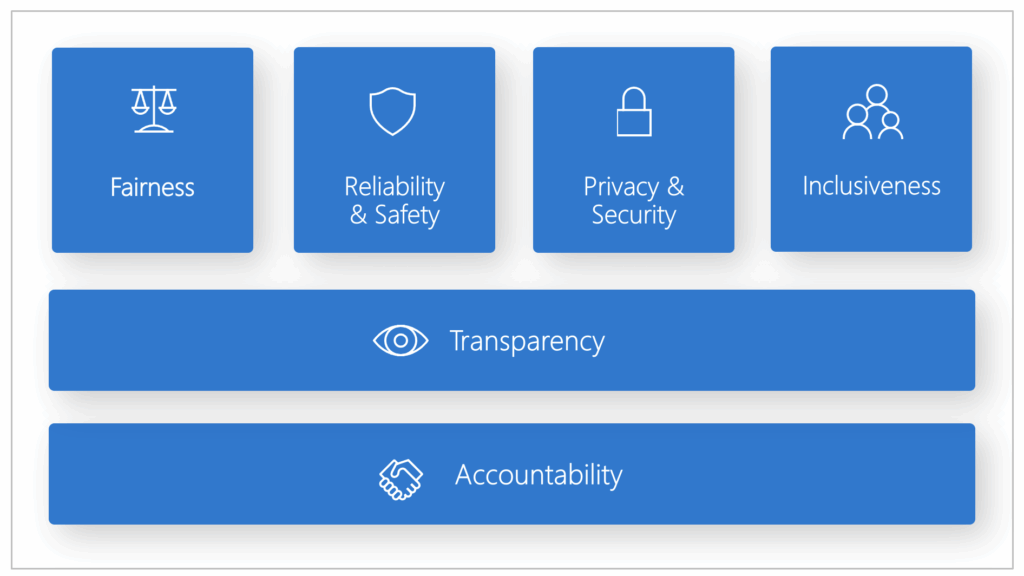

2.1 Core Principles of Responsible AI Framework:

According to Microsoft (2025), the framework rests on six core principles: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. These principles form the foundation of a responsible and trustworthy approach to AI, especially as intelligent technology becomes more common in everyday products and services. The list below groups closely related principles together:

- Fairness & Inclusiveness: Minimizing bias and ensuring equitable outcomes for all groups.

- Transparency & Explainability: Making AI decisions understandable and interpretable.

- Accountability: Establishing clear responsibility for AI system outcomes.

- Privacy & Security: Protecting data and ensuring AI systems are robust against threats.

- Reliability & Safety: Ensuring AI performs consistently and safely as intended.

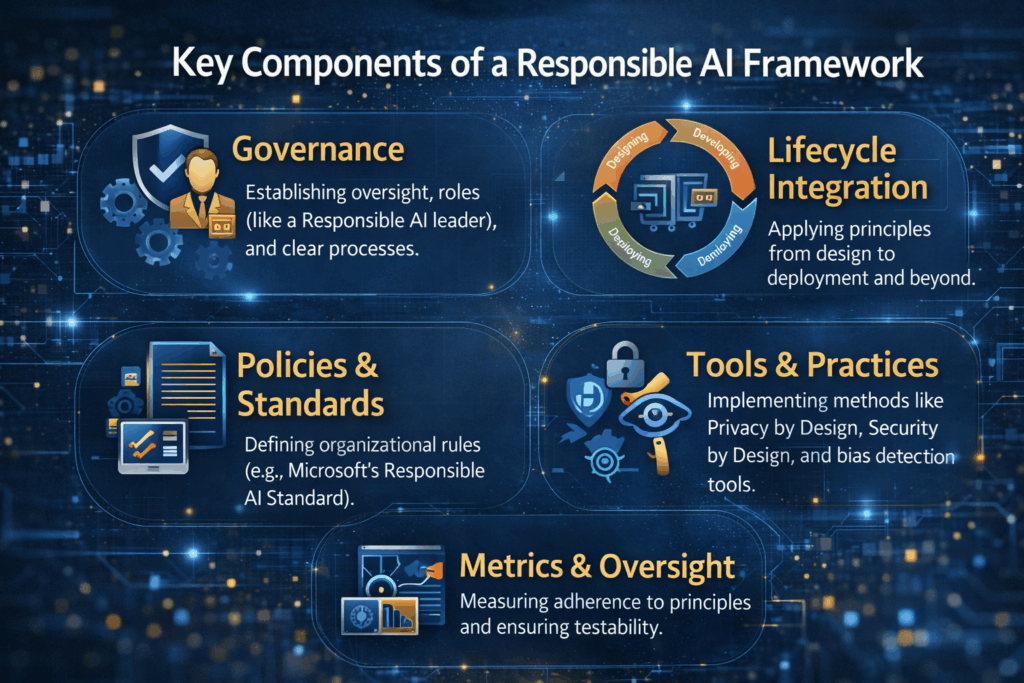

2.2 Key Components of a Responsible AI Framework:

While each organization will adapt its governance structures to its size, industry, and regulatory environment, most frameworks share several foundational components, particularly in the BFSI industry:

- Governance: Establishing oversight, roles (like a Responsible AI leader), and clear processes.

- Lifecycle Integration: Applying principles from design to deployment and beyond.

- Policies & Standards: Defining organizational rules (e.g., Microsoft’s Responsible AI Standard).

- Tools & Practices: Implementing methods like Privacy by Design, Security by Design, and bias detection tools.

- Metrics & Oversight: Measuring adherence to principles and ensuring testability.
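One way to make "measuring adherence" concrete and testable is to compute group-level fairness metrics on a model's decisions. The sketch below is a minimal, dependency-free Python illustration that assumes binary approve/decline decisions and a single protected-attribute column; the function names, the group labels, and the 0.8 threshold are hypothetical choices, not part of Microsoft's standard or any other framework named above. The 0.8 default echoes the "four-fifths" rule of thumb from US employment-selection guidance and is used here purely for illustration.

```python
from collections import defaultdict

def approval_rates(decisions, groups):
    """Share of approved decisions (1 = approve, 0 = decline) for each group label."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        approved[group] += decision
    return {g: approved[g] / totals[g] for g in totals}

def fairness_report(decisions, groups, reference_group, min_ratio=0.8):
    """Compare each group's approval rate with a reference group.

    parity_difference: rate(group) - rate(reference); 0 means equal approval rates.
    impact_ratio: rate(group) / rate(reference); values below min_ratio are flagged.
    min_ratio=0.8 is an illustrative assumption, not a regulatory requirement for lending.
    """
    rates = approval_rates(decisions, groups)
    ref_rate = rates[reference_group]
    report = {}
    for group, rate in rates.items():
        if group == reference_group:
            continue
        ratio = rate / ref_rate if ref_rate > 0 else float("nan")
        report[group] = {
            "approval_rate": rate,
            "parity_difference": rate - ref_rate,
            "impact_ratio": ratio,
            "flagged": ratio < min_ratio,
        }
    return report

# Toy data: 1 = approved, 0 = declined; "A" and "B" are placeholder group labels.
decisions = [1, 1, 0, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
report = fairness_report(decisions, groups, reference_group="A")
print(report["B"])
```

In this toy data, group B is approved at one third of group A's rate, so it is flagged for review. A production setup would more likely rely on an established fairness library and on metrics chosen with compliance teams, but the underlying arithmetic is the same.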

3. Why Built-in Responsible AI is Critical for Banks

Banks will need to watch for bias as AI becomes more common in financial services, and the rapid adoption of new AI-based technologies makes this mission-critical. For instance, recent research on bias in generative AI found that a text-to-image model associated prompts such as "fast-food worker" and "social worker" with darker-skinned women, whereas high-paying occupations such as "lawyer" or "judge" were depicted as lighter-skinned men.

In this instance, the model was more biased than reality: just 3% of the images it generated for the prompt "judge" featured women, whereas women make up 34% of US judges. Unintended bias and discrimination of this kind pose significant risks, harming business operations, public perception, and customers' lives.
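The size of this gap can be expressed as a simple representation ratio of the two figures reported above (3% versus 34%):

```latex
\frac{\text{share of women in generated ``judge'' images}}{\text{share of women among US judges}}
  = \frac{0.03}{0.34} \approx 0.088
```

Women therefore appeared in the generated images at less than one-tenth of their real-world share.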

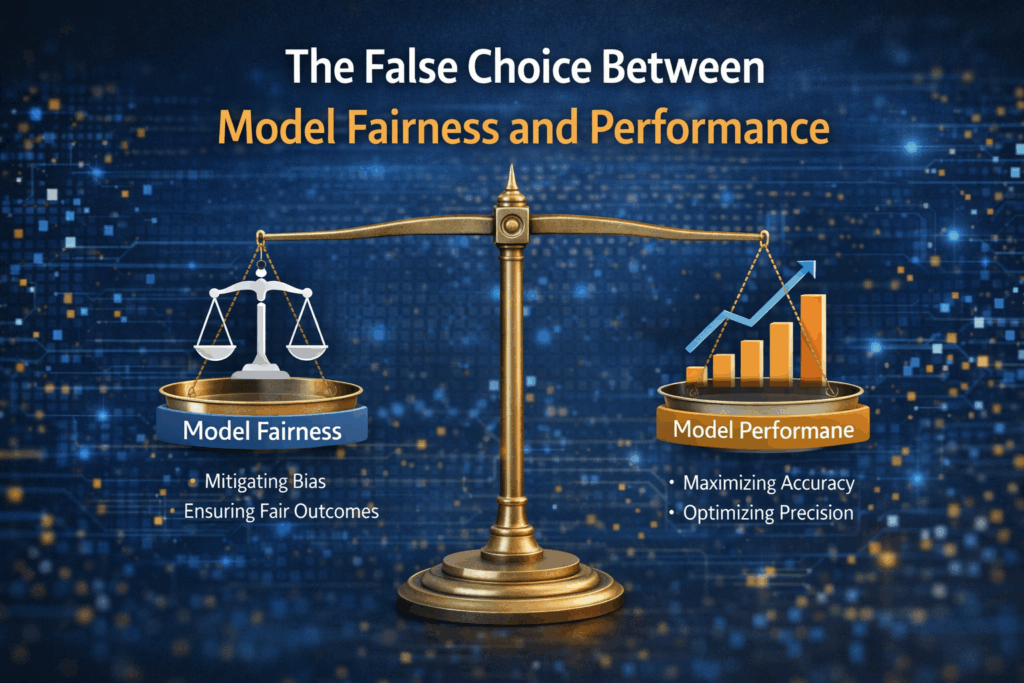

4. The False Choice Between Model Fairness and Performance

Banks are frequently persuaded that fairness and fraud-detection performance trade off against each other. Lacking a precise way to measure both, they optimize their models for efficiency and performance at the expense of fairness. As a result, many banks put performance first to protect profits, while responsible AI and model fairness are treated as "nice to have" agenda items. However, if model fairness is not given priority, biases can enter a bank's models unnoticed.

Frankly, this is a risky strategy. Ignoring bias in models over an extended period is not only a poor decision but a dangerous one. If enough customer groups feel they were refused services because of their age, gender, color, zip code, or other socioeconomic characteristics, the bank may face serious reputational damage and possibly legal action.

5. Reframing Fairness and Performance as Complementary Objectives

Leading banks are increasingly recognizing that fairness and performance are not mutually exclusive goals, but interdependent dimensions of high-quality AI systems. When bias is proactively identified and mitigated, models often generalize better, behave more consistently across customer segments, and produce more stable outcomes over time.

In practice, this means shifting from single-metric optimization toward multi-objective evaluation frameworks that balance accuracy, fairness, robustness, and explainability. By doing so, banks can achieve strong predictive performance while reducing long-term operational, regulatory, and reputational risk.
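A simple way to move beyond single-metric optimization is constrained selection: treat fairness and robustness as thresholds and maximize accuracy only among candidate models that meet them. The sketch below assumes each candidate has already been scored offline; the `Candidate` fields, the metric choices, the thresholds, and the model names are hypothetical illustrations. Explainability is left out because it is usually assessed through qualitative review rather than a single number.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    accuracy: float     # e.g., validation accuracy or AUC (illustrative)
    parity_gap: float   # absolute approval-rate gap between groups (illustrative)
    robustness: float   # e.g., accuracy on a stressed or shifted test set (illustrative)

def select_model(candidates, max_parity_gap=0.05, min_robustness=0.0):
    """Return the most accurate candidate that meets the fairness and robustness limits."""
    eligible = [
        c for c in candidates
        if c.parity_gap <= max_parity_gap and c.robustness >= min_robustness
    ]
    if not eligible:
        return None  # nothing meets the constraints; escalate for human review
    return max(eligible, key=lambda c: c.accuracy)

# Made-up scores for three hypothetical candidate models.
models = [
    Candidate("gbm_v1", accuracy=0.91, parity_gap=0.12, robustness=0.85),
    Candidate("gbm_v2", accuracy=0.90, parity_gap=0.03, robustness=0.86),
    Candidate("logreg", accuracy=0.87, parity_gap=0.02, robustness=0.84),
]
best = select_model(models, max_parity_gap=0.05, min_robustness=0.8)
print(best.name)
```

In this made-up example, the most accurate raw model (`gbm_v1`) is rejected because of its parity gap, and `gbm_v2` is selected at a cost of one accuracy point. Weighted scoring or Pareto-front analysis are common alternatives to hard thresholds; thresholds are used here because they are easy to audit.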

6. Embedding Bias Mitigation into the AI Delivery Lifecycle

Bias mitigation is most effective when embedded directly into the AI lifecycle rather than applied as a post-hoc control. This includes representative data sourcing, bias-aware feature engineering, fairness testing during model training, and continuous monitoring after deployment to detect drift or emergent bias. Human-in-the-loop review mechanisms further ensure that automated decisions remain contestable and aligned with institutional values. Over time, this lifecycle-driven approach enables banks to evolve their models responsibly as customer engagement, regulations, and societal expectations change.
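Continuous post-deployment monitoring can start as simply as recomputing a fairness metric on each window of live decisions and raising an alert when it drifts past a tolerance. The sketch below assumes decisions and protected-attribute labels are logged per time window; the window labels, the 0.05 tolerance, and the idea of routing alerts to human reviewers are illustrative assumptions, not a prescribed design.

```python
def monitor_parity(batches, max_gap=0.05):
    """Flag time windows where approval rates across groups diverge by more than max_gap.

    batches: list of (window_label, decisions, groups) tuples, where decisions are
    0/1 outcomes and groups are protected-attribute labels (both assumed to be logged).
    Returns (window_label, gap) pairs, e.g. to route to human-in-the-loop review.
    """
    alerts = []
    for label, decisions, groups in batches:
        rates = {}
        for g in set(groups):
            picked = [d for d, grp in zip(decisions, groups) if grp == g]
            rates[g] = sum(picked) / len(picked)
        if len(rates) < 2:
            continue  # cannot compare groups in this window
        gap = max(rates.values()) - min(rates.values())
        if gap > max_gap:
            alerts.append((label, round(gap, 3)))
    return alerts

# Hypothetical weekly windows with placeholder group labels "A" and "B".
batches = [
    ("2024-W01", [1, 0, 1, 0], ["A", "A", "B", "B"]),  # A = 0.5, B = 0.5
    ("2024-W02", [1, 1, 1, 0], ["A", "A", "B", "B"]),  # A = 1.0, B = 0.5
]
print(monitor_parity(batches))
```

Using the gap between the highest and lowest group approval rates keeps the check meaningful when more than two groups are monitored. A production pipeline would typically also track input-data drift and require minimum sample sizes per window, which this sketch omits.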

7. Organizational Enablement and Cultural Readiness

Technology alone cannot deliver Bias-Safe AI without organizational readiness. Banks must give cross-functional teams, including data scientists, risk management officers, compliance leaders, and business owners, shared accountability for responsible AI outcomes. Clear escalation paths, training programs, and executive sponsorship are essential to ensure that fairness considerations influence real decision-making, not just documentation. When responsible AI becomes part of the bank's operating culture, ethical safeguards are reinforced through everyday practices rather than enforced solely through audits.

8. Moving from Principles to Practice

The path forward for banks lies in adopting Built-in Responsible AI solutions that integrate governance, fairness testing, explainability, and monitoring into a unified framework. Rather than treating bias mitigation as a constraint, banks should view it as a strategic enabler of sustainable AI scale and long-term trust. Institutions that act now will be better positioned to innovate confidently while meeting regulatory expectations and customer demands. If your organization is exploring how to operationalize Bias-Safe AI in BFSI, now is the time to assess your AI maturity and take the next step toward responsible, performance-driven deployment.