Artificial intelligence (AI) is moving decisively from experimentation to infrastructure. From 2026 onward, AI will no longer be framed as a competitive advantage reserved for leading innovators. It will become a baseline capability that reshapes how economies operate, how organizations create value, and how individuals work and live. The next phase of AI is less about novelty and more about integration, governance, and real-world impact.

This transition marks a structural shift. Similar to electricity or the internet in earlier eras, AI is becoming embedded across systems rather than deployed as isolated tools. Organizations that fail to adapt will not simply lose efficiency. They will struggle to remain relevant in markets where speed, intelligence, and adaptability are assumed.

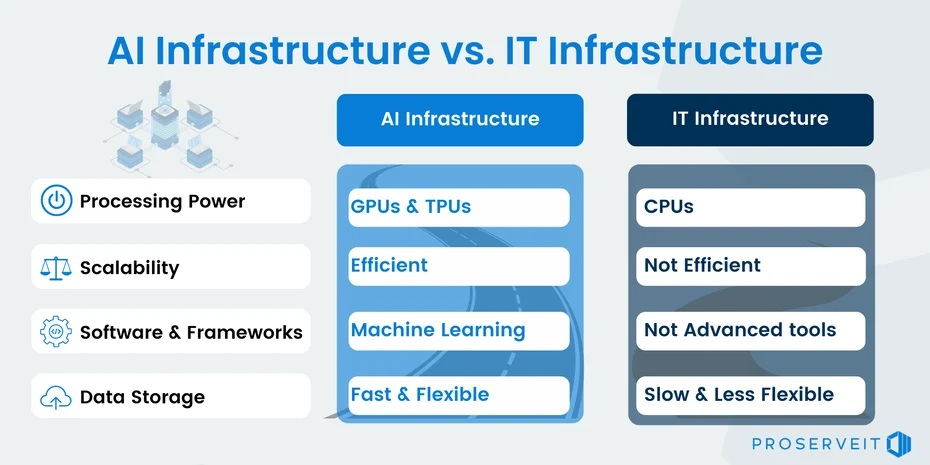

1. From Tools to Systems: AI as Foundational Infrastructure

In the years leading up to 2026, AI adoption was often characterized by pilots and proofs of concept. Chatbots, recommendation engines, and predictive analytics were implemented in silos, frequently disconnected from core operations. Beyond 2026, this model will no longer suffice. AI is increasingly designed as scalable infrastructure that underpins workflows end to end. Data ingestion, decision logic, automation, and feedback loops are becoming tightly coupled. Rather than asking where AI can be added, organizations will ask how systems should be designed with AI at their core.

This infrastructural shift has several implications. First, reliability and uptime matter as much as model accuracy. Second, AI systems must scale across geographies, user groups, and regulatory environments. Third, integration with legacy systems becomes a strategic challenge rather than a technical afterthought. As a result, investment is moving away from one-off models toward platforms that support continuous learning, monitoring, and orchestration. AI maturity is no longer measured by how advanced a single model is, but by how seamlessly intelligence flows across the organization.

2. Enterprise AI Transformation Becomes Non-Negotiable

Between 2026 and 2030, enterprise AI transformation will shift from an optional modernization initiative to a prerequisite for competitiveness. This transformation is not limited to technology stacks. It fundamentally alters organizational design, decision rights, and leadership models. Traditional enterprises have relied on hierarchical decision-making and periodic planning cycles. AI-driven organizations operate differently. They emphasize real-time data, decentralized execution, and continuous optimization. Decisions that once took weeks are increasingly automated or supported by AI systems that update recommendations dynamically.

However, transformation at this scale exposes structural tensions. Many organizations still separate IT, data, and business units. AI does not respect these boundaries. Successful enterprises will be those that redesign governance, incentives, and talent models around cross-functional collaboration. Importantly, enterprise AI transformation is not about replacing human judgment wholesale. It is about reallocating cognitive effort. Routine analysis and pattern recognition shift to machines, while humans focus on strategy, ethics, and complex trade-offs. Organizations that treat AI purely as a cost-cutting tool risk eroding trust and long-term capability.

3. Human–AI Collaboration Redefines Work

One of the most consequential shifts beyond 2026 will be the normalization of human–AI collaboration. Early narratives around AI often framed the technology as a replacement for human labor. The emerging reality is more nuanced. AI excels at scale, consistency, and speed. Humans excel at context, empathy, and value judgment. The future of work lies in designing systems where these strengths reinforce each other. In practical terms, this means AI copilots embedded into daily workflows across professions.

Knowledge workers will increasingly interact with AI as a default interface. Drafting, analysis, simulation, and decision support become conversational and iterative. This changes skill requirements. Literacy in prompting, validation, and oversight becomes as important as domain expertise.

At the same time, Human–AI collaboration raises new questions about accountability. When decisions are co-produced by humans and machines, responsibility must be clearly defined. Organizations will need explicit frameworks that clarify where human override is required and how AI recommendations are audited. Beyond productivity, this collaboration reshapes professional identity. Roles evolve from execution to supervision, from production to curation. Those who adapt will find their leverage amplified. Those who resist may find their expertise increasingly marginalized.
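
One way to make such a framework concrete is a routing rule that decides when a human must sign off and records every decision for later audit. The sketch below is illustrative only: the `Recommendation` fields, the `high_impact` flag, and the 0.9 confidence threshold are assumptions made for the example, not part of any standard or regulation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Recommendation:
    case_id: str
    action: str
    confidence: float  # model-reported score in [0, 1] (assumed field)
    high_impact: bool  # e.g. a lending or hiring decision (assumed field)


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, rec: Recommendation, routing: str) -> None:
        # Keep enough context to reconstruct who or what made the decision.
        self.entries.append({
            "case_id": rec.case_id,
            "action": rec.action,
            "confidence": rec.confidence,
            "routing": routing,
            "logged_at": datetime.now(timezone.utc).isoformat(),
        })


def route_recommendation(rec: Recommendation, log: AuditLog,
                         min_confidence: float = 0.9) -> str:
    """Return "auto" or "human_review" and log the choice.

    The threshold is a hypothetical policy value; a real one would be
    set by the organization's governance process.
    """
    needs_human = rec.high_impact or rec.confidence < min_confidence
    routing = "human_review" if needs_human else "auto"
    log.record(rec, routing)
    return routing
```

In this sketch, a high-impact case always goes to a person regardless of model confidence, which keeps the override rule explicit rather than buried in model behavior.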

4. The Rise of AI Governance and Regulation

As AI systems become embedded in critical infrastructure, AI governance and regulation move from theory to enforcement. By 2026, most major economies are expected to have formal regulatory regimes governing AI development and deployment. These frameworks increasingly focus on risk rather than technology type. High-impact use cases such as finance, healthcare, public services, and employment are subject to stricter oversight. Requirements around transparency, explainability, data provenance, and human oversight are becoming standard.

For organizations, this introduces both constraints and clarity. Compliance is no longer a box-ticking exercise. It requires ongoing monitoring of models, data pipelines, and outcomes. Governance functions must be integrated into product and engineering teams rather than operating as external controls. At the same time, regulation creates a level playing field. Clear rules reduce uncertainty and enable long-term investment. Organizations that proactively embed AI governance into their operating model often gain trust advantages with customers, partners, and regulators. A critical challenge lies in global inconsistency. AI governance regimes vary across jurisdictions, creating complexity for multinational organizations. Navigating this landscape will require modular architectures and adaptive compliance strategies.

5. Data Becomes a Strategic Liability as Well as an Asset

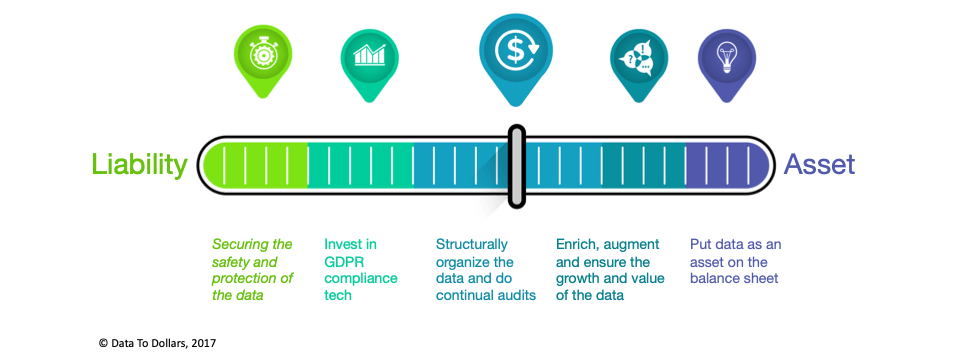

In earlier phases of AI adoption, data was widely described as the new oil. Beyond 2026, this metaphor becomes incomplete. Data remains a strategic asset, but it is also a growing liability. As AI systems scale, data quality, bias, and security risks compound. Poor data governance can propagate errors across automated decisions at unprecedented speed. High-profile failures have already demonstrated how reputational and legal risks can outweigh short-term gains.

This reality reinforces the importance of disciplined data management. Lineage, consent, and contextual integrity become non-negotiable. Organizations must treat data stewardship as a core competency rather than a support function. Moreover, synthetic data and simulation are increasingly used to complement real-world data. This reduces dependency on sensitive datasets while enabling robust testing. The ability to balance realism and control becomes a differentiating capability.
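
As a rough illustration of lineage and consent acting as gates rather than documentation, the sketch below filters records before they reach a training pipeline. The `DatasetRecord` fields and the purpose strings are hypothetical, and treating synthetic records as exempt from consent checks is a simplifying assumption for the example, not legal guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetRecord:
    record_id: str
    source: str                    # lineage: originating system (assumed field)
    consented_purposes: frozenset  # purposes the data subject agreed to
    synthetic: bool = False        # generated data, not tied to a person


def usable_for(record: DatasetRecord, purpose: str) -> bool:
    """True if the record may be used for the given purpose."""
    if record.synthetic:
        # Simplifying assumption: synthetic data needs no consent check.
        return True
    # Real data needs both a known origin and consent for this purpose.
    return bool(record.source) and purpose in record.consented_purposes


def filter_for_purpose(records, purpose: str):
    """Keep only the records that pass the lineage and consent gate."""
    return [r for r in records if usable_for(r, purpose)]
```

A record with consent but no recorded source is rejected here, reflecting the point that lineage is a requirement in its own right.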

6. AI Infrastructure and Scalability as Competitive Moats

By the late 2020s, differentiation in AI will increasingly come from execution rather than access to models. As foundation models become commoditized, AI infrastructure and scalability emerge as durable competitive moats. Scalable AI systems require more than computational power. They depend on robust pipelines, monitoring, versioning, and fallback mechanisms. Resilience matters because downtime or degraded performance directly impacts core operations.
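
A fallback mechanism can be as simple as wrapping the primary model call so that a failure degrades to a cheaper, more predictable path instead of halting the workflow. The following is a minimal sketch under stated assumptions: `primary` and `fallback` are hypothetical callables supplied by the caller, and catching every exception is a deliberately blunt choice that a production system would likely narrow.

```python
import logging

logger = logging.getLogger("inference")


def predict_with_fallback(features, primary, fallback):
    """Try the primary model; on failure, log it and use the fallback.

    Returns a (prediction, source) tuple so that monitoring can track
    how often the degraded path is taken.
    """
    try:
        return primary(features), "primary"
    except Exception:
        # Logs the traceback so the failure stays visible to operators.
        logger.exception("primary model failed; using fallback")
        return fallback(features), "fallback"
```

Returning the source alongside the prediction ties resilience to monitoring: a rising share of "fallback" results is an early signal of degraded performance.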

Organizations that invest early in scalable infrastructure benefit from compounding returns. Each new use case leverages existing capabilities rather than requiring bespoke solutions. This lowers marginal costs and accelerates innovation cycles. Conversely, organizations that rely on fragmented tools face escalating complexity. Technical debt accumulates, and the cost of change increases. Over time, this limits strategic flexibility.

7. Economic and Societal Implications Beyond the Enterprise

The impact of AI beyond 2026 extends well beyond organizational boundaries. At the macro level, AI influences productivity growth, labor markets, and income distribution. Countries that successfully integrate AI into education, infrastructure, and public services are likely to see disproportionate gains. However, these gains are uneven. Regions and populations with limited access to digital infrastructure risk being left behind. This raises questions of social responsibility and policy intervention.

Public institutions themselves are undergoing AI-driven transformation. From resource allocation to citizen services, AI is reshaping how governments operate. Transparency and accountability are especially critical in this context, as public trust is harder to rebuild once lost. Education systems face parallel pressures. Preparing individuals for human–AI collaboration requires rethinking curricula, assessment, and lifelong learning. Technical skills alone are insufficient. Ethical reasoning, systems thinking, and adaptability become central.

Conclusion: From Acceleration to Stewardship

Beyond 2026, the defining challenge of AI is no longer acceleration but stewardship. The technology is powerful, pervasive, and increasingly invisible. Its impact depends less on what it can do and more on how it is governed, integrated, and aligned with human goals. Organizations that recognize this shift early will be better positioned to navigate uncertainty. They will build systems that are not only intelligent but also resilient, accountable, and trusted. In doing so, they will help shape a future where AI enhances human capability rather than undermining it. The future of AI is not predetermined. It will be shaped by deliberate choices made today, at the intersection of technology, governance, and human judgment.