ROI (Return on Investment) is the first question every executive asks when an AI initiative is proposed, and it is also the question that determines whether that initiative quietly dies as a pilot or earns the right to scale across the organization. While many leaders assume ROI can only be proven after full deployment, experienced teams know that early ROI signals appear much sooner. These signals, if correctly interpreted, provide strong predictive power about whether an AI initiative will scale successfully or collapse under cost, complexity, and organizational resistance.


This article examines the most reliable early ROI signals that indicate whether an AI initiative is structurally capable of scaling. Rather than focusing on lagging financial metrics, it emphasizes leading indicators that emerge during discovery, pilot, and early production stages.

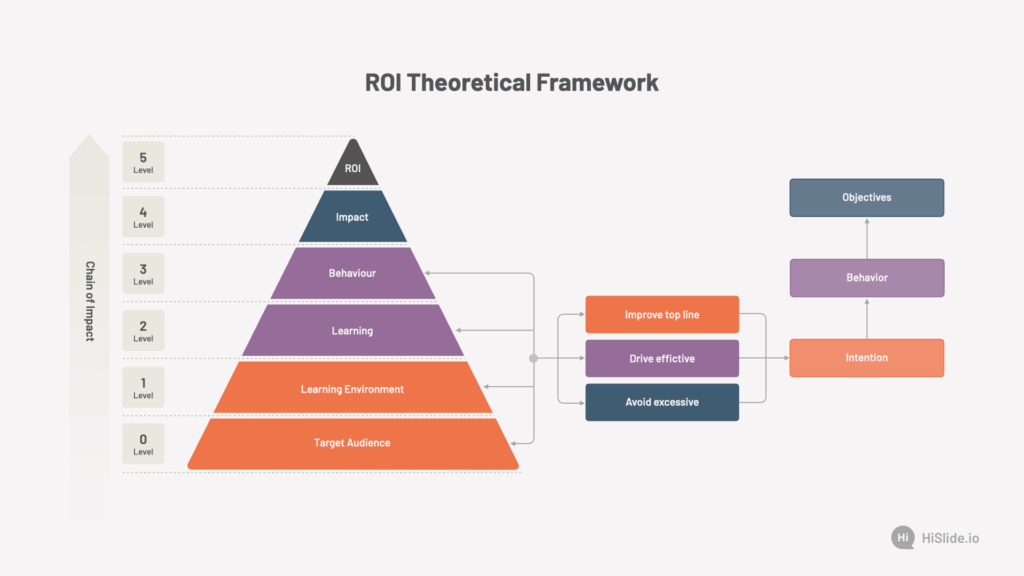

1. Why Traditional ROI Models Fail for AI

Conventional ROI frameworks were designed for deterministic systems. AI, by contrast, introduces uncertainty, learning curves, and nonlinear value creation. This creates three structural problems. First, value realization is delayed. Many AI benefits emerge only after models are trained, integrated, and adopted by users. Second, costs are front-loaded. Data preparation, experimentation, and iteration consume resources before value becomes visible. Third, benefits are often indirect. AI may not immediately reduce headcount or cost but instead improve decision quality, speed, or risk management.
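The interaction of front-loaded costs and delayed value can be made concrete with a cumulative cash-flow calculation. The sketch below is illustrative only: the function name `break_even_month` and every monthly figure are hypothetical assumptions, not data from a real initiative.

```python
from itertools import accumulate
from typing import Optional, Sequence


def break_even_month(costs: Sequence[float], benefits: Sequence[float]) -> Optional[int]:
    """Return the first 1-indexed month where cumulative net value is >= 0.

    Returns None if break-even is never reached within the given horizon.
    """
    if len(costs) != len(benefits):
        raise ValueError("costs and benefits must cover the same months")
    monthly_net = [b - c for c, b in zip(costs, benefits)]
    for month, cumulative in enumerate(accumulate(monthly_net), start=1):
        if cumulative >= 0:
            return month
    return None


# Hypothetical pilot: heavy spend up front, benefits arriving only after adoption.
monthly_costs = [50, 50, 30, 10, 10, 10, 10, 10]
monthly_benefits = [0, 0, 10, 40, 60, 60, 60, 60]
print(break_even_month(monthly_costs, monthly_benefits))
```

A review held at month three of this hypothetical schedule would see only the deepest point of the cumulative loss, which is why lagging financial metrics alone can mislead a scaling decision.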

As a result, organizations that wait for “hard ROI” before scaling often kill initiatives that were strategically sound. Conversely, organizations that scale based on hype often discover too late that value cannot be sustained. The solution is to identify early ROI signals that correlate strongly with long-term scalability.

2. Signal 1: Clear Economic Ownership of the Problem

The strongest early ROI signal is not technical performance but economic clarity. Scalable AI initiatives are anchored to a problem that already has a visible economic owner. This means someone in the business can answer, with reasonable confidence:

- What does this problem cost us today?

- Where does that cost sit in the P&L or balance sheet?

- Who is accountable for reducing it?

When AI teams struggle to quantify baseline cost or impact, scaling risk increases dramatically. In contrast, when a business owner can articulate cost leakage, revenue loss, or risk exposure, AI outcomes can be translated into financial language early. This signal predicts scalability because it ensures alignment between technical success and business incentives. Without economic ownership, pilots remain “interesting experiments” rather than investable assets.

3. Signal 2: Measurable Proxy Metrics Before Financial Impact

Early-stage AI initiatives rarely deliver immediate financial gains. However, scalable initiatives define proxy metrics that reliably precede financial outcomes.

Examples include:

- Reduction in decision cycle time

- Increase in forecast accuracy

- Decrease in exception handling

- Improvement in precision or recall tied to operational thresholds

These metrics matter only when they are causally linked to downstream financial impact. For example, improving forecast accuracy is meaningful only if it affects inventory levels, working capital, or service levels. When teams agree early on which proxy metrics matter and how they translate into ROI, scaling becomes a rational investment decision rather than a leap of faith.
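One way to make the link between a proxy metric and a financial outcome explicit is to write the translation down as a small calculation. The sketch below assumes the textbook safety-stock approximation (service-level factor times forecast-error standard deviation times the square root of lead time) as the causal path from forecast accuracy to inventory carrying cost. The function names and all parameter values are hypothetical, and a real business would need its own inventory model.

```python
import math


def safety_stock(z: float, forecast_error_std: float, lead_time_periods: float) -> float:
    """Textbook approximation: z * sigma * sqrt(lead time), in units of stock."""
    return z * forecast_error_std * math.sqrt(lead_time_periods)


def annual_carrying_saving(
    std_before: float,
    std_after: float,
    z: float = 1.65,                 # assumed service-level factor (roughly 95%)
    lead_time_periods: float = 4.0,  # assumed replenishment lead time
    unit_cost: float = 20.0,         # assumed cost per unit held
    carrying_rate: float = 0.25,     # assumed annual carrying cost as a share of unit cost
) -> float:
    """Translate a drop in forecast-error std into annual carrying-cost savings."""
    units_freed = (
        safety_stock(z, std_before, lead_time_periods)
        - safety_stock(z, std_after, lead_time_periods)
    )
    return units_freed * unit_cost * carrying_rate


# Hypothetical pilot result: forecast-error std falls from 100 to 80 units per period.
saving = annual_carrying_saving(std_before=100.0, std_after=80.0)
print(f"Estimated annual carrying-cost saving: {saving:.2f}")
```

The value of writing the translation down is less the number itself than the fact that the business owner and the AI team have to agree on each assumption in it.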

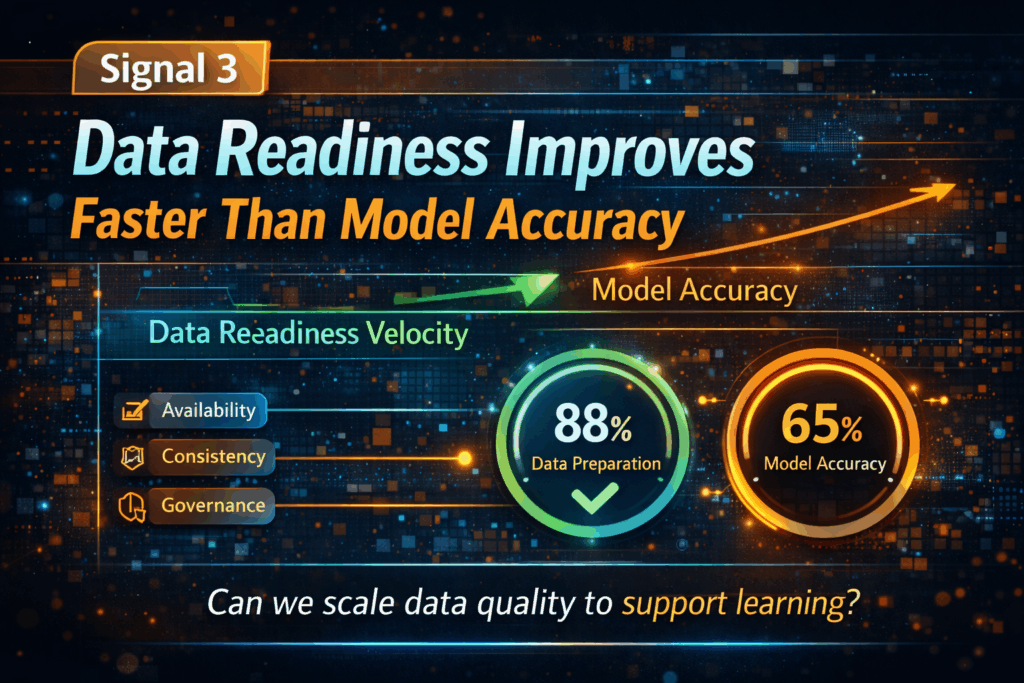

4. Signal 3: Data Readiness Improves Faster Than Model Accuracy

A common misconception is that early ROI depends primarily on model performance. In reality, data readiness velocity is a far stronger predictor of scalability.

Scalable AI initiatives show rapid improvement in:

- Data availability

- Data consistency across sources

- Label quality or feedback loops

- Governance and access controls

Even if early model accuracy is modest, fast progress in data readiness indicates that the organization can support learning at scale. Conversely, teams that achieve impressive early accuracy on brittle, manually curated data often fail when moving to production.

Data readiness predicts ROI scalability because it determines marginal cost. When each new deployment requires heroic data engineering, ROI deteriorates rapidly as scope expands.

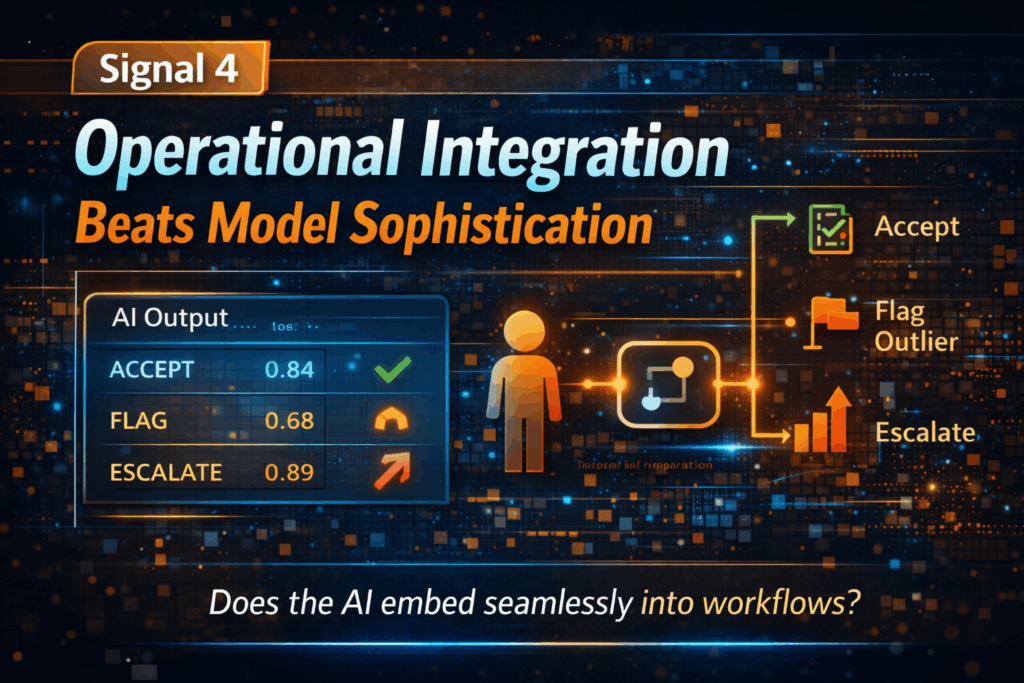

5. Signal 4: Operational Integration Beats Model Sophistication

Another reliable early signal is how easily AI outputs integrate into real workflows. Scalable initiatives prioritize integration over sophistication.

Key questions include:

- Does the AI output align with how decisions are actually made?

- Can frontline teams act on the output without retraining their mental models?

- Is there a clear action path tied to each prediction or recommendation?

Early ROI appears when AI reduces friction rather than adds cognitive load. If users must interpret complex scores without guidance, adoption stalls and value leakage occurs. Initiatives that embed AI directly into existing systems, alerts, or decision gates tend to scale because incremental rollout requires minimal behavioral change.

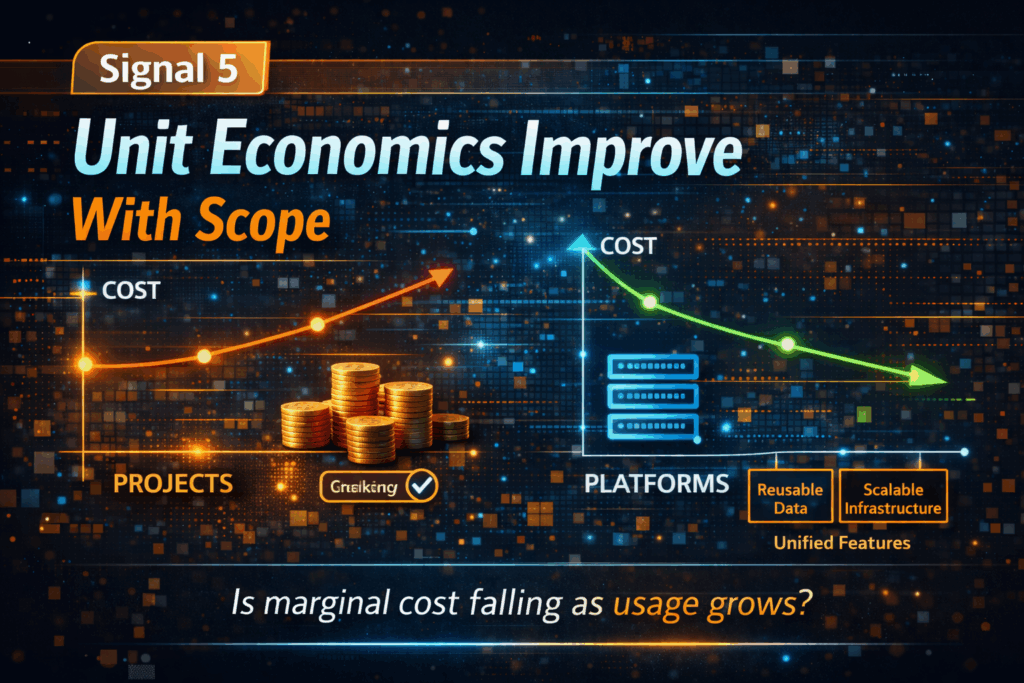

6. Signal 5: Unit Economics Improve With Scope

A powerful but often overlooked early signal is unit economics behavior. Even in pilots, teams can observe whether cost per decision, prediction, or transaction decreases as volume increases. Positive signals include:

- Shared data pipelines across use cases

- Reusable features or embeddings

- Centralized model serving or monitoring

If each new use case requires bespoke infrastructure, custom labeling, and unique workflows, scaling will erode ROI. In contrast, when marginal cost declines with reuse, ROI compounds as scope expands. This signal matters because it transforms AI from a project into a platform. Platforms scale economically; projects do not.
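The platform-versus-project distinction can be checked with simple arithmetic even during a pilot. The following sketch uses a hypothetical cost model (one shared platform cost, a fixed cost per use case, and a variable cost per decision) to show how cost per decision behaves as use cases are added. All names and figures are illustrative assumptions.

```python
def cost_per_decision(
    shared_platform_cost: float,
    cost_per_use_case: float,
    variable_cost_per_decision: float,
    n_use_cases: int,
    decisions_per_use_case: int,
) -> float:
    """Average cost per decision when n use cases share one platform."""
    total_decisions = n_use_cases * decisions_per_use_case
    if total_decisions <= 0:
        raise ValueError("need at least one use case with at least one decision")
    total_cost = (
        shared_platform_cost
        + n_use_cases * cost_per_use_case
        + variable_cost_per_decision * total_decisions
    )
    return total_cost / total_decisions


# Hypothetical figures: shared pipelines and serving cost 100,000; each use case adds 10,000.
for n in (1, 2, 4):
    unit_cost = cost_per_decision(100_000, 10_000, 0.01, n, 100_000)
    print(f"{n} use case(s): {unit_cost:.2f} per decision")
```

If `cost_per_use_case` in this model is as large as the shared cost, because every use case needs bespoke infrastructure and labeling, the curve flattens and the reuse benefit largely disappears.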

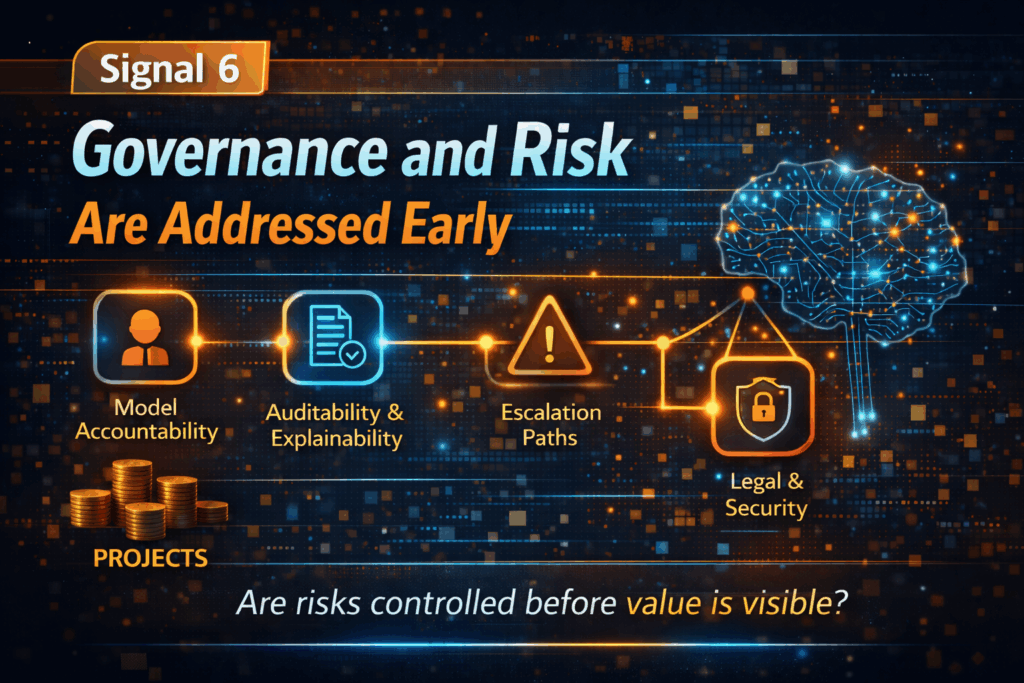

7. Signal 6: Governance and Risk Are Addressed Early

Many AI initiatives fail at scale not because they lack value, but because they trigger governance friction too late. Scalable initiatives address risk early, even when impact is small.

This includes:

- Clear model ownership and accountability

- Auditability and explainability aligned with use case risk

- Defined escalation paths for model failure

- Alignment with legal, compliance, and security teams

Early ROI signals appear when governance accelerates deployment rather than blocking it. This happens when guardrails are built once and reused, reducing approval cycles over time. Ignoring governance during pilots creates hidden liabilities that surface precisely when scaling begins.

8. Signal 7: Business Pull Replaces Technical Push

Perhaps the most telling early ROI signal is who is driving expansion. When scaling is driven by business demand rather than technical advocacy, ROI is likely to materialize. Signs of business pull include:

- New teams requesting access to the solution

- Budget owners proposing expansion use cases

- Operational leaders defending the initiative during prioritization reviews

This signal reflects perceived value. Even before formal ROI is calculated, business pull indicates that the initiative is solving a real problem better than existing alternatives. AI that scales because it is “strategically important” but lacks grassroots demand often struggles to justify continued investment.

9. Interpreting Early ROI Signals

No single signal guarantees scalability. However, when multiple signals appear together, predictive confidence increases significantly. For example, strong economic ownership plus improving proxy metrics suggests financial relevance; fast data readiness plus reusable infrastructure suggests cost efficiency; and operational integration plus business pull suggests adoption durability.
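The pairings described above can be captured as a simple checklist. This is a minimal sketch rather than a scoring methodology: the signal keys and the function name `supported_dimensions` are hypothetical labels, and each signal is treated as a yes/no judgment for simplicity.

```python
from typing import Dict, List

# Pairings taken from the paragraph above; the keys are hypothetical labels.
SIGNAL_PAIRS = {
    "financial relevance": ("economic_ownership", "proxy_metrics"),
    "cost efficiency": ("data_readiness", "reusable_infrastructure"),
    "adoption durability": ("operational_integration", "business_pull"),
}


def supported_dimensions(signals: Dict[str, bool]) -> List[str]:
    """Return the dimensions for which both paired signals are present."""
    return [
        dimension
        for dimension, (first, second) in SIGNAL_PAIRS.items()
        if signals.get(first, False) and signals.get(second, False)
    ]


# Hypothetical assessment of a pilot.
observed = {
    "economic_ownership": True,
    "proxy_metrics": True,
    "data_readiness": True,
    "reusable_infrastructure": False,
    "operational_integration": True,
    "business_pull": True,
}
print(supported_dimensions(observed))
```

In this hypothetical assessment, cost efficiency is the unsupported dimension, which points the next investment at shared infrastructure rather than at model improvements.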

Conversely, warning signs emerge when:

- ROI narratives remain abstract

- Metrics shift frequently

- Data pipelines remain fragile

- Adoption depends on executive mandates rather than user demand

From signals to scaling decisions: The practical implication is clear. Organizations should not ask, “Has this AI delivered ROI yet?” Instead, they should ask, “Are the early ROI signals strong enough to justify scaling investment?”

This reframing allows leaders to allocate capital more intelligently, kill initiatives early when signals are weak, and double down when signals align, even if financial impact is still emerging. AI scaling is less about prediction accuracy and more about economic momentum. Early ROI signals reveal whether that momentum exists.

Conclusion

ROI remains the ultimate measure of AI success, but waiting for perfect financial proof before scaling is a strategic mistake. The organizations that win with AI learn to read early signals that predict scalable value. Clear economic ownership, meaningful proxy metrics, accelerating data readiness, operational integration, improving unit economics, proactive governance, and genuine business pull together form a reliable early warning system. When these signals align, AI initiatives do not need persuasion to scale. They earn it. For leaders navigating AI investment decisions, the ability to interpret these early ROI signals may be the most valuable capability of all.